Raw audio processing

In some scenarios, raw audio captured through the microphone must be processed to achieve the desired functionality or to enhance the user experience. Voice SDK enables you to pre-process and post-process the captured audio to implement custom playback effects.

Understand the tech

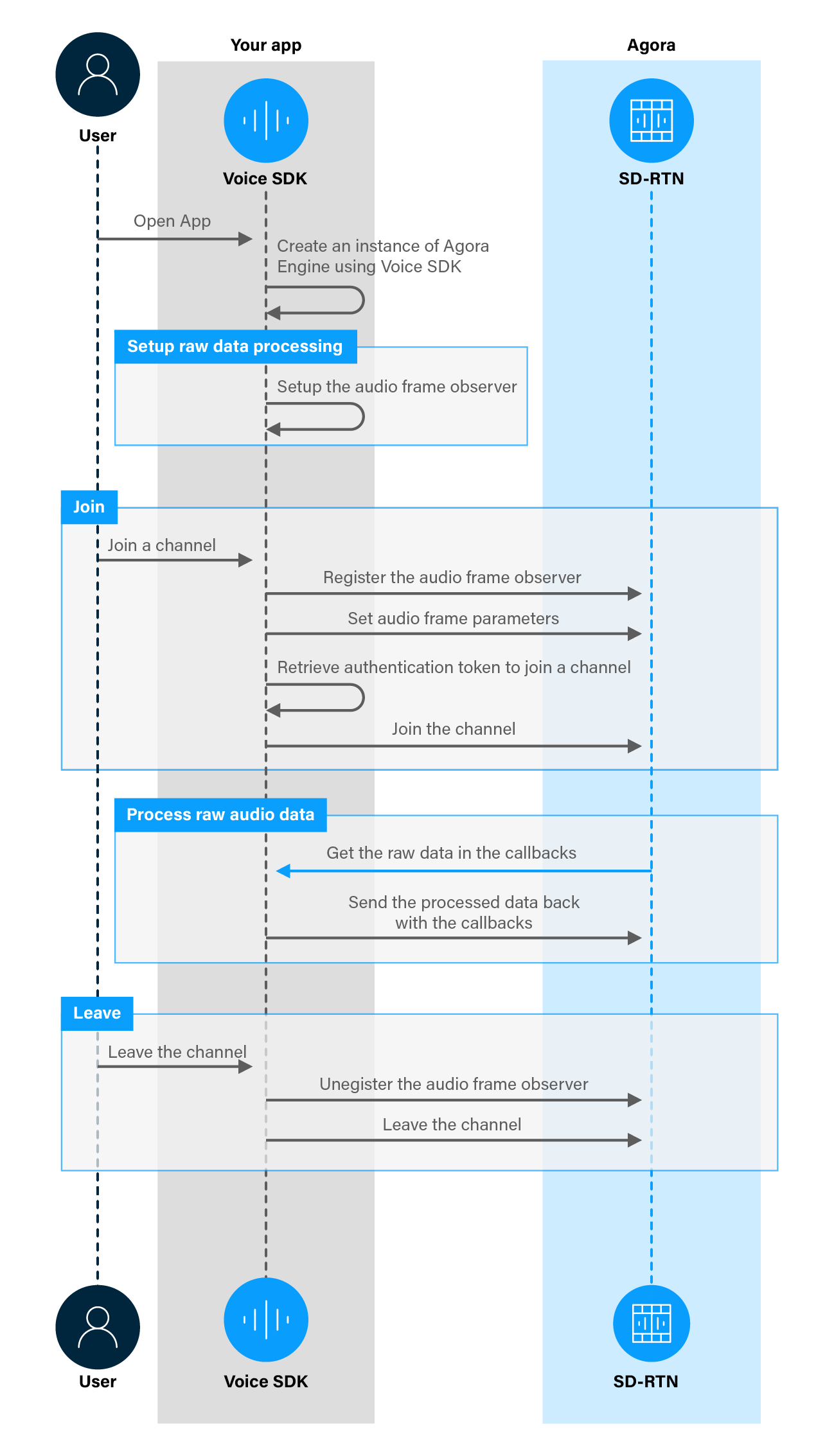

You can use the raw data processing functionality in Voice SDK to process the audio feed to suit your scenario. You can pre-process the captured signal before it is sent to the encoder, or post-process the decoded signal before playback. To process raw audio data in your app, take the following steps:

- Set up an audio frame observer.

- Register the audio frame observer before joining a channel.

- Set the format of the audio frames that each callback receives.

- Implement callbacks in the audio frame observer to process raw audio data.

- Unregister the audio frame observer before leaving the channel.
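The order of these steps matters. The following Java sketch models that order with hypothetical FrameObserver and RawAudioLifecycle types, invented for illustration and not part of the Agora SDK; each method throws if it is called out of sequence.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model, NOT Agora SDK code: FrameObserver and RawAudioLifecycle
// are invented names that only encode the order of the steps listed above.
interface FrameObserver {
    void onFrame(short[] samples);
}

final class RawAudioLifecycle {
    private FrameObserver observer; // null means no observer is registered
    private boolean formatSet;
    private boolean inChannel;
    final List<String> log = new ArrayList<>();

    // Passing null unregisters, mirroring how this page unregisters the observer.
    void registerObserver(FrameObserver o) {
        if (o != null && inChannel) {
            throw new IllegalStateException("register the observer before joining");
        }
        observer = o;
        log.add(o == null ? "unregister" : "register");
    }

    void setFrameFormat() {
        if (observer == null) {
            throw new IllegalStateException("register the observer before setting the format");
        }
        formatSet = true;
        log.add("setFormat");
    }

    void join() {
        if (observer == null || !formatSet) {
            throw new IllegalStateException("register and set the format before joining");
        }
        inChannel = true;
        log.add("join");
    }

    // Stands in for the SDK delivering a captured or decoded frame while in the channel.
    void deliver(short[] samples) {
        if (inChannel && observer != null) {
            observer.onFrame(samples);
        }
    }

    void leave() {
        if (observer != null) {
            throw new IllegalStateException("unregister the observer before leaving");
        }
        inChannel = false;
        log.add("leave");
    }
}
```

In this model, frames reach the observer only between join() and the unregister call, and skipping a step fails immediately rather than silently dropping callbacks.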

The figure above shows the workflow you implement to process raw audio data in your app.

Prerequisites

To follow this procedure, you must have implemented the SDK quickstart project for Voice Calling.

Project setup

To create the environment necessary to integrate raw audio processing in your app, open the SDK quickstart Voice Calling project you created previously.

Implement raw data processing

When a user captures or receives audio data, the app can process it: captured audio before it is encoded and sent, and received audio before it is played. This section shows, step by step, how to retrieve this data and process it.

Handle the system logic

This section describes the steps required to use the relevant libraries, declare the necessary variables, and set up audio processing.

Import the required Android and Agora libraries

To integrate Voice SDK frame observer libraries into your app, add the following statements after the last import statement in /app/java/com.example.<projectname>/MainActivity.

Process raw audio data

To register and use an audio frame observer in your app, take the following steps:


Set up the audio frame observer

The IAudioFrameObserver gives you access to each audio frame after it is captured or before it is played back. To set up the IAudioFrameObserver, add the following lines to the MainActivity class after the variable declarations:
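Inside the observer, processing usually means reading and rewriting the samples in the frame buffer. The following self-contained Java sketch shows one such operation, a volume gain with clamping. It assumes, without confirming it against the SDK, that each frame holds 16-bit signed little-endian PCM in a ByteBuffer and that the observer is configured so the SDK uses the modified buffer; PcmGain and applyGain are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper, not an Agora API. Assumes 16-bit signed little-endian PCM.
final class PcmGain {
    private PcmGain() {}

    /**
     * Scales every 16-bit sample in [position, limit) of the buffer by gain,
     * clamping to the short range. Position, limit, and byte order of the
     * caller's buffer are left unchanged.
     */
    static void applyGain(ByteBuffer buffer, float gain) {
        // A duplicate shares the same bytes but has its own order and position.
        ByteBuffer pcm = buffer.duplicate().order(ByteOrder.LITTLE_ENDIAN);
        for (int i = pcm.position(); i + 1 < pcm.limit(); i += 2) {
            int scaled = Math.round(pcm.getShort(i) * gain);
            // Clamp instead of letting the cast wrap around, which would cause loud clicks.
            scaled = Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, scaled));
            pcm.putShort(i, (short) scaled);
        }
    }
}
```

Calling PcmGain.applyGain(buffer, 2.0f) on a captured frame doubles its amplitude; samples that would exceed the 16-bit range saturate at the limit.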

Register the audio frame observer

To receive the callbacks declared in IAudioFrameObserver, you must register the audio frame observer with the Agora Engine before joining a channel. To specify the format of the audio frames that each IAudioFrameObserver callback receives, use the setRecordingAudioFrameParameters, setMixedAudioFrameParameters, and setPlaybackAudioFrameParameters methods. To do this, add the following lines before agoraEngine.joinChannel in the joinChannel() method:
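When you choose values for these methods, the callback interval depends on how many samples each callback delivers. The sketch below assumes the relation described in the Agora API reference for these methods, interval = samplesPerCall / (sampleRate * channels) seconds with a minimum of 0.01 s; verify this against the reference for your SDK version. FrameFormat and its methods are hypothetical helpers, not SDK calls.

```java
// Hypothetical helpers, not Agora APIs.
// Assumption: callback interval (s) = samplesPerCall / (sampleRate * channels), and it must be >= 0.01 s.
final class FrameFormat {
    static final int MIN_INTERVAL_MS = 10;

    private FrameFormat() {}

    /** Callback interval in milliseconds for the given format. */
    static double intervalMs(int sampleRate, int channels, int samplesPerCall) {
        return 1000.0 * samplesPerCall / ((long) sampleRate * channels);
    }

    /** True if the format yields an interval of at least MIN_INTERVAL_MS. */
    static boolean isValid(int sampleRate, int channels, int samplesPerCall) {
        // Integer form of samplesPerCall / (sampleRate * channels) >= MIN_INTERVAL_MS / 1000.
        return 1000L * samplesPerCall >= (long) MIN_INTERVAL_MS * sampleRate * channels;
    }

    /** Smallest samplesPerCall that gives a callback interval of at least intervalMs. */
    static int samplesPerCallFor(int sampleRate, int channels, int intervalMs) {
        long needed = (long) sampleRate * channels * intervalMs;
        return (int) ((needed + 999) / 1000); // ceiling division by 1000
    }
}
```

For example, a 16 kHz mono format with 1024 samples per call gives a 64 ms interval, and samplesPerCallFor(16000, 1, 10) returns 160, the smallest value that meets the 10 ms minimum. Integer arithmetic avoids rounding surprises in the validity check.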

Unregister the audio observer when you leave a channel

When you leave a channel, unregister the frame observer by calling the register frame observer method again with a null argument. To do this, add the following lines to the joinLeaveChannel(View view) method before the call to agoraEngine.leaveChannel():

Test your implementation

To verify that raw audio processing works in your app:

- Generate a temporary token in Agora Console.

- In your browser, navigate to the Agora web demo and update App ID, Channel, and Token with the values for your temporary token, then click Join.

- In Android Studio, open app/java/com.example.<projectname>/MainActivity, and update appId, channelName, and token with the values for your temporary token.

- Edit the iAudioFrameObserver definition to add code that processes the raw audio data you receive in the following callbacks:

  - onRecordAudioFrame: gets the captured audio frame data.

  - onPlaybackAudioFrame: gets the audio frame for playback.

- Connect a physical Android device to your development device.

- In Android Studio, click Run app. A moment later, you see the project installed on your device.

  If this is the first time you run the project, grant microphone access to your app.

- Press Join to hear the processed audio feed from the web app.
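To confirm that audio actually reaches your callbacks during this test, you could log the level of each frame from onRecordAudioFrame or onPlaybackAudioFrame. The sketch below computes the RMS level in dBFS; it assumes 16-bit signed little-endian PCM in a ByteBuffer, and FrameLevel is a hypothetical helper name, not an SDK API.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper, not an Agora API. Assumes 16-bit signed little-endian PCM.
final class FrameLevel {
    private FrameLevel() {}

    /** RMS of the samples in [position, limit), normalized so full scale is 1.0. */
    static double rms(ByteBuffer buffer) {
        // Read through a duplicate so the caller's position and byte order are untouched.
        ByteBuffer pcm = buffer.duplicate().order(ByteOrder.LITTLE_ENDIAN);
        double sumSquares = 0.0;
        int count = 0;
        for (int i = pcm.position(); i + 1 < pcm.limit(); i += 2) {
            double s = pcm.getShort(i) / 32768.0;
            sumSquares += s * s;
            count++;
        }
        return count == 0 ? 0.0 : Math.sqrt(sumSquares / count);
    }

    /** Level in dBFS; a silent frame returns negative infinity. */
    static double dbfs(ByteBuffer buffer) {
        double r = rms(buffer);
        return r == 0.0 ? Double.NEGATIVE_INFINITY : 20.0 * Math.log10(r);
    }
}
```

A frame of alternating samples at plus and minus 16384 (half of full scale) measures about -6.02 dBFS, while an all-zero frame returns negative infinity, which makes muted or missing audio easy to spot in the log.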

Reference

This section contains information that supplements this page, or points you to documentation that explains other aspects of this product.